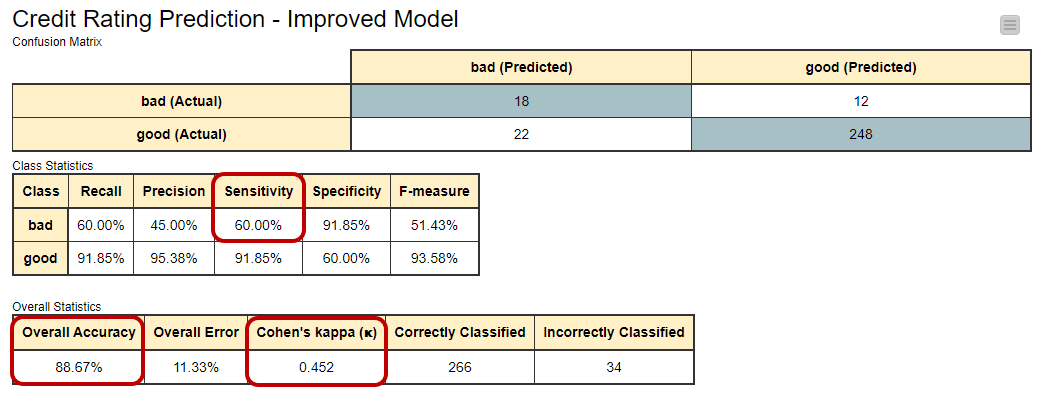

Cohen's Kappa and classification table metrics 2.0: an ArcView 3.x extension for accuracy assessment of spatially explicit mo

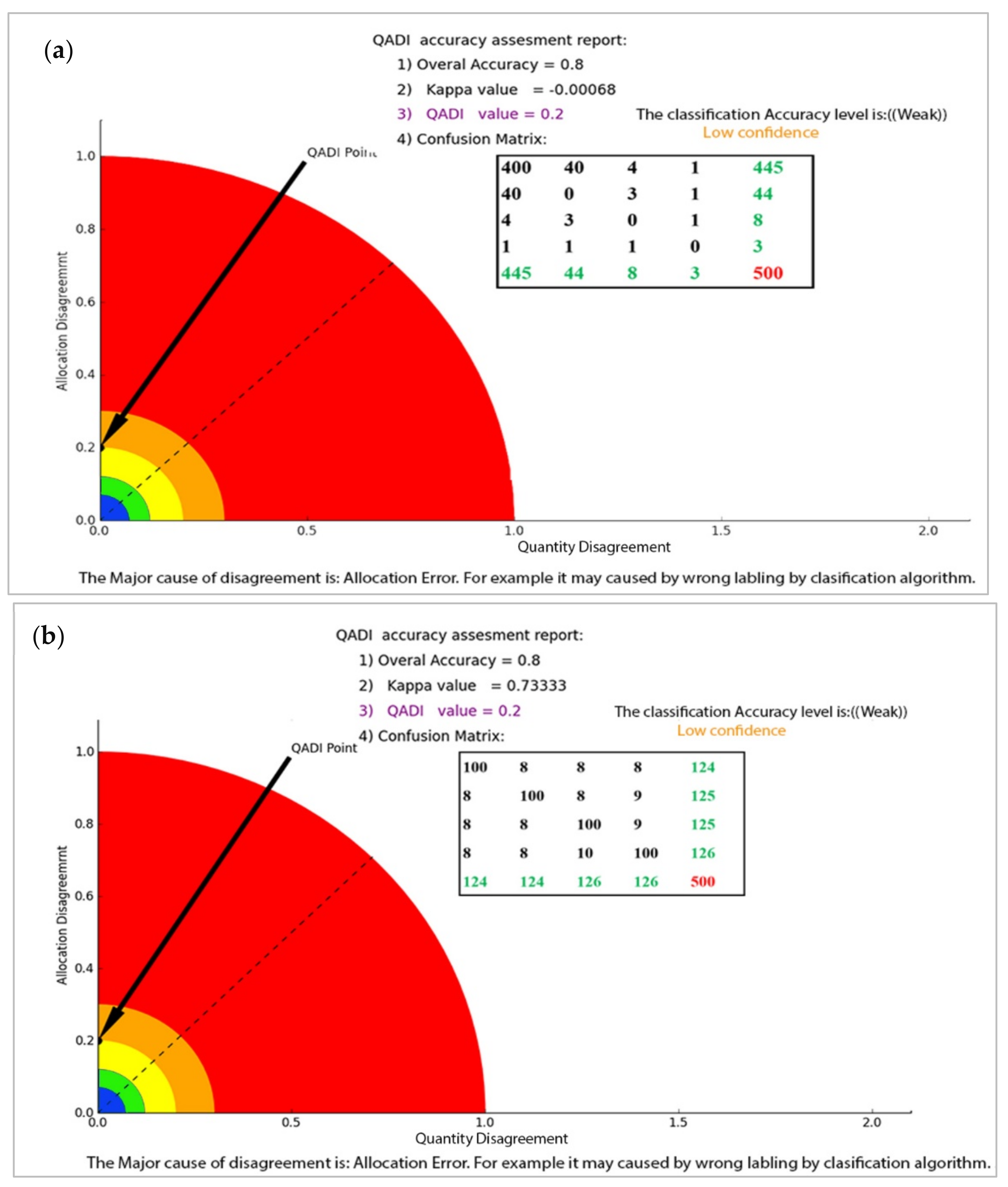

QADI as a New Method and Alternative to Kappa for Accuracy Assessment of Remote Sensing-Based Image Classification | Sensors

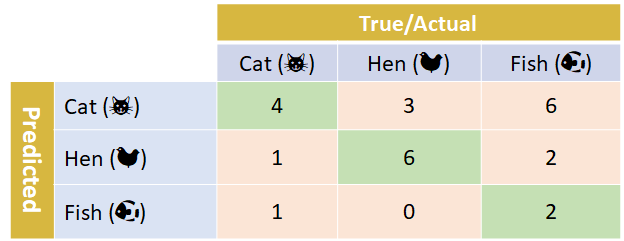

Multi-Class Metrics Made Simple, Part III: the Kappa Score (aka Cohen's Kappa Coefficient) | by Boaz Shmueli | Towards Data Science

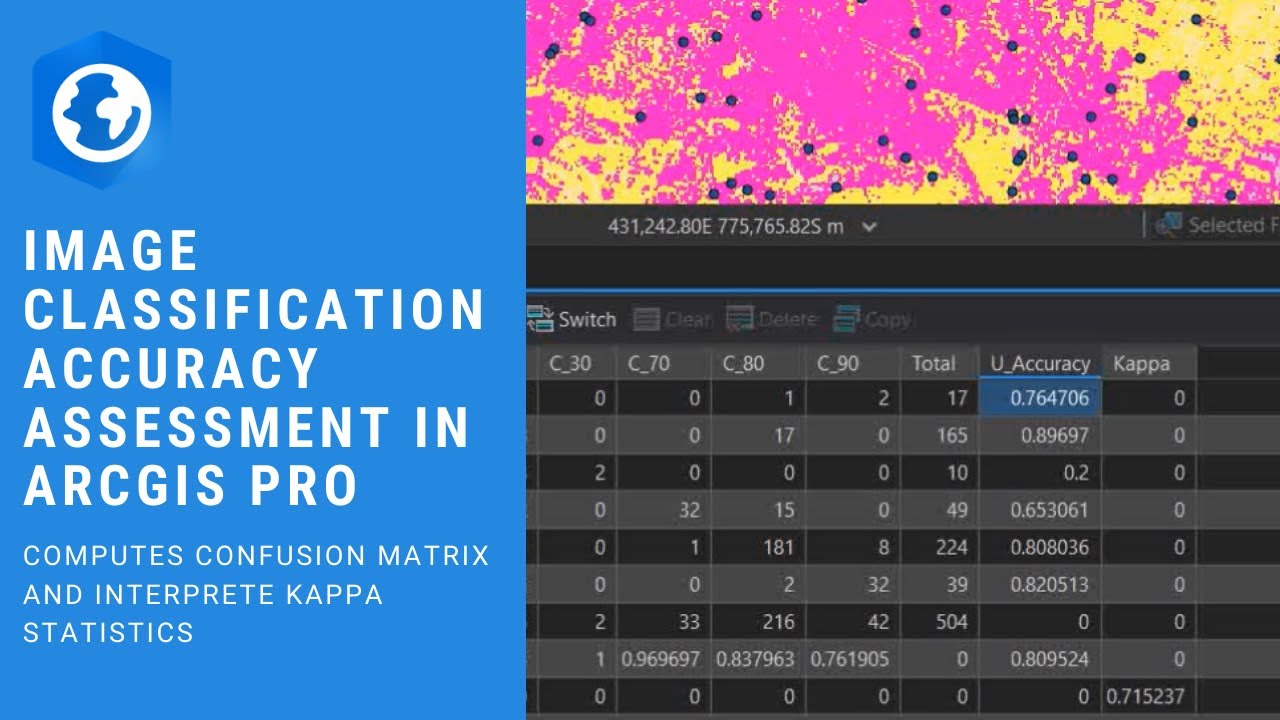

Accuracy Assessment of Image Classification in ArcGIS Pro (Confusion Matrix and Kappa Index) - YouTube

![Suggested ranges for the Kappa Coefficient [2].](https://www.researchgate.net/profile/Shivakumar-B-R/publication/325603545/figure/tbl2/AS:669212804653076@1536564174670/Suggested-ranges-for-the-Kappa-Coefficient-2.png)